Table of Contents

- Vector Search: How It Works and Why It Is the Core of Modern AI Search

- What Is Vector Search?

- Why Keyword Search Falls Short

- How Vector Search Works

- Vector Search vs Keyword Search

- Why Vector Search Powers Modern AI Search

- The Role of Vector Databases

- Performance Considerations at Scale

- Why Vector Search Matters for the Future

- FAQs

Vector search is one of the core technologies behind AI-powered search systems. The term ‘vector search’ draws about 1,800 monthly searches in the US alone, a sign that businesses are rethinking enterprise search and discovery in favor of more holistic approaches.

Keyword-based search alone is no longer adequate. Users increasingly type queries in natural language, most valuable information is stored as unstructured data, and people expect highly relevant results.[1] Vector search addresses this gap: embeddings let systems capture higher-level concepts such as meaning and intent, not just the literal words.

Below, we break down what vector search really means, how it works under the hood, and why it’s quickly becoming a core component of modern AI search, RAG systems, and intelligent recommendations.

What Is Vector Search?

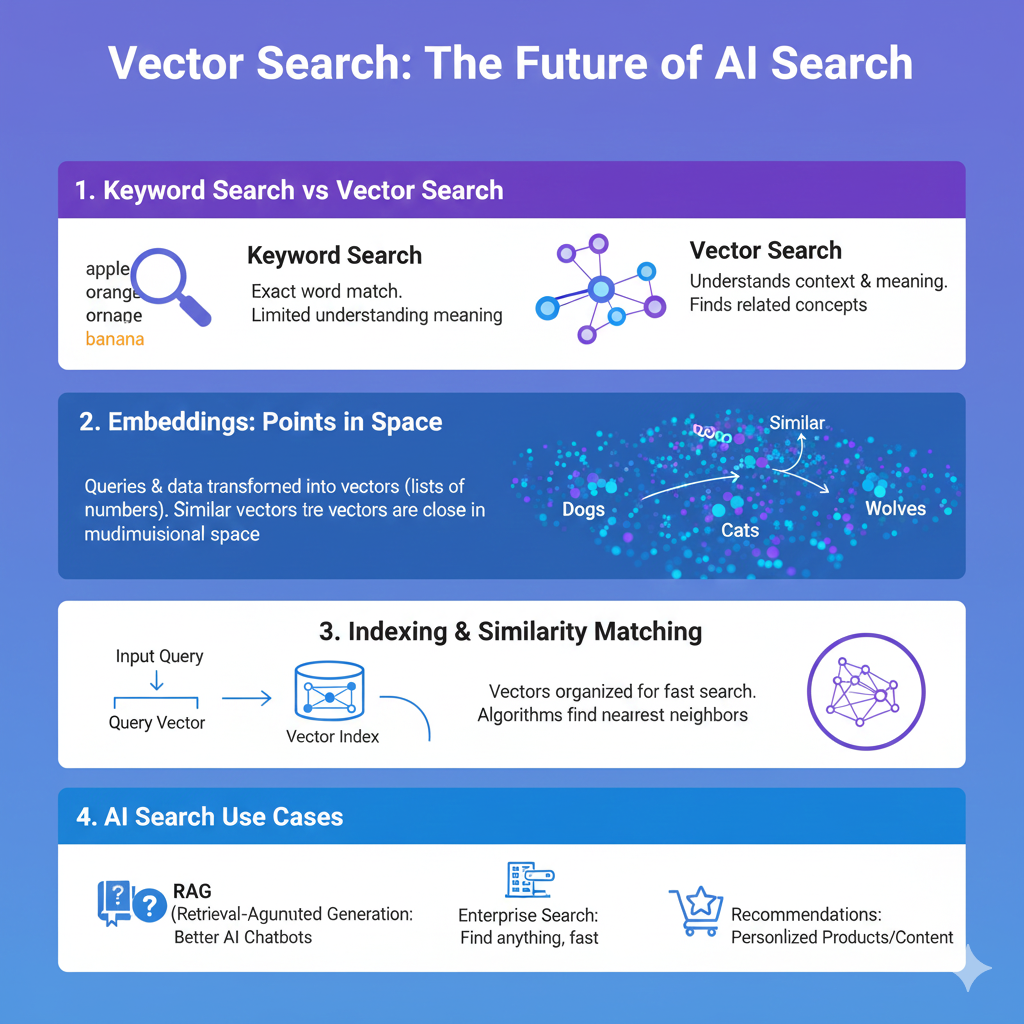

Vector search is a search technique that returns results based on semantic similarity rather than exact keyword matches.

Instead of treating content as plain text, it converts the content into embeddings: numerical representations that capture meaning, context, and relationships in a high-dimensional space where similar items are placed close to each other.

This enables retrieval of relevant results even if:

- The query uses different wording than the content.

- Synonyms or paraphrases are involved.

- The data is unstructured or multimodal.

In simple words, vector search finds results for what the user means and not just what they type.
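To make "closer in vector space" concrete, here is a minimal sketch that compares embeddings with cosine similarity. The three vectors are hypothetical, hand-picked values standing in for the output of a real embedding model (which would produce hundreds or thousands of dimensions); only the similarity calculation itself is standard.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

# Hypothetical 3-dimensional embeddings, hand-picked for illustration only.
# Real embedding models output hundreds or thousands of dimensions.
embeddings = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "I forgot my login credentials": [0.8, 0.2, 0.1],
    "Shipping rates to Canada": [0.1, 0.0, 0.9],
}

query = embeddings["How do I reset my password?"]
for text, vector in embeddings.items():
    print(f"{cosine_similarity(query, vector):.2f}  {text}")
```

The password question and the "forgot my login credentials" sentence share no keywords, yet their vectors score roughly 0.98, while the unrelated shipping question scores about 0.11.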

Why Keyword Search Falls Short

Keyword-based search depends on exact matches and predefined rules. It works for simple, well-specified queries but breaks down in real-world scenarios where:

- Users describe the problem in their own words.

- Content is spread across documents, PDFs, FAQs, and support tickets.

- Terminology varies across teams or regions.

Keyword search cannot understand context, intent, or semantic relationships. The gap may be easy to overlook on a small dataset, but it becomes more apparent as data volume and complexity grow.
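A deliberately simplified sketch of the problem: the scoring function below just counts shared terms (real engines use ranking functions such as BM25 plus stemming and stop-word handling, but they still depend on term overlap). The documents and query are hypothetical examples.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def keyword_score(query, document):
    """Count query terms that appear verbatim in the document."""
    return len(tokenize(query) & tokenize(document))

docs = [
    "Troubleshooting: notebook fails to power up after an update",
    "Laptop bags and sleeves on sale",
]
query = "laptop won't turn on"
for doc in docs:
    print(keyword_score(query, doc), doc)
```

The relevant troubleshooting article scores 0 because it says "notebook" and "power up" instead of "laptop" and "turn on", while the unrelated sales listing scores 2 on "laptop" and "on".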

Vector search was designed to solve these problems.

How Vector Search Works

Although vector search feels seamless to users, it relies on a structured pipeline behind the scenes.

1. Input Processing

The system preprocesses raw input data, such as text, images, or audio, into a format suitable for the embedding model. This ensures consistency across different types of data.
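For text, preprocessing commonly includes cleaning and splitting long documents into chunks that fit an embedding model's input limit. The sketch below is one simple way to do that, assuming word-based windows; the function names, the 50-word window, and the 10-word overlap are illustrative assumptions (many pipelines count model tokens rather than words).

```python
import re

def normalize(text):
    """Collapse runs of whitespace (spaces, tabs, newlines) and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()

def chunk_words(text, max_words=50, overlap=10):
    """Split text into windows of at most max_words words.

    Consecutive chunks share `overlap` words so context that spans a
    chunk boundary is not lost entirely.
    """
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = normalize(text).split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the text
    return chunks

raw = "Vector search   retrieves results\n\nby meaning, not keywords."
print(chunk_words(raw, max_words=4, overlap=1))
# ['Vector search retrieves results', 'results by meaning, not', 'not keywords.']
```

Each chunk would then be embedded separately, so a search can point to the relevant passage rather than the whole document.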

2. Embedding Generation

An embedding model converts the processed input into a vector.

- Text embeddings capture semantic meaning

- Image embeddings capture visual similarity

- Audio embeddings capture sound patterns

Each vector represents the essence of the content in numerical form.
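Real embedding models are neural networks, but the idea of "content in, vector out" can be sketched with a much older technique: averaging word vectors (mean pooling) and normalizing the result. The two-dimensional word vectors below are hypothetical, hand-picked values, not learned ones.

```python
import math

# Hypothetical 2-D word vectors, hand-picked for illustration. A real
# embedding model learns its vectors from data and uses many more dimensions.
WORD_VECTORS = {
    "laptop": [0.90, 0.10],
    "notebook": [0.85, 0.15],
    "battery": [0.70, 0.30],
    "power": [0.60, 0.40],
    "shipping": [0.05, 0.95],
    "delivery": [0.10, 0.90],
}

def embed(text):
    """Mean-pool the vectors of known words, then scale the result to unit length."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        raise ValueError("input contains no known words")
    mean = [sum(column) / len(vectors) for column in zip(*vectors)]
    norm = math.sqrt(sum(x * x for x in mean))
    return [x / norm for x in mean]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

a = embed("laptop battery")
b = embed("notebook power")
c = embed("shipping delivery")
print(dot(a, b), dot(a, c))
```

Because `embed` returns unit-length vectors, the dot product of two embeddings equals their cosine similarity; "laptop battery" lands far closer to "notebook power" than to "shipping delivery".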

3. Vector Indexing

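Vectors are stored in indexes built specifically for similarity search. Common index types include:

- Flat: exact, brute-force comparison against every stored vector

- IVF (inverted file): groups vectors into clusters and searches only the most relevant clusters

- HNSW (Hierarchical Navigable Small World): a graph-based index for fast approximate search

Approximate indexes such as IVF and HNSW are what make it practical to search millions of vectors quickly.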
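The flat option is the simplest to picture: every query is compared with every stored vector, so results are exact but the cost grows linearly with the collection size. A minimal sketch follows; `FlatIndex` is a hypothetical class for illustration, not the API of any particular library (libraries such as FAISS ship heavily optimized flat indexes).

```python
import heapq
import math

class FlatIndex:
    """Exact (brute-force) index: every search compares the query with every stored vector."""

    def __init__(self):
        self.ids = []
        self.vectors = []

    def add(self, item_id, vector):
        self.ids.append(item_id)
        self.vectors.append(vector)

    def search(self, query, k=3):
        """Return the k (id, distance) pairs closest to the query by Euclidean distance."""
        scored = [(item_id, math.dist(query, vec)) for item_id, vec in zip(self.ids, self.vectors)]
        return heapq.nsmallest(k, scored, key=lambda pair: pair[1])

index = FlatIndex()
index.add("doc-a", [0.0, 0.0])
index.add("doc-b", [1.0, 0.0])
index.add("doc-c", [5.0, 5.0])
print(index.search([0.9, 0.1], k=2))
```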

4. Approximate Nearest Neighbor Search

Comparing a query exactly against every vector is slow on large datasets. Approximate Nearest Neighbor (ANN) algorithms speed this up, often by orders of magnitude, by searching only a small, promising portion of the data while still returning highly relevant results.
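The sketch below shows the IVF idea in plain Python: cluster the vectors with k-means, store each vector in the list of its nearest centroid, and at query time scan only the `n_probe` closest lists. It is a simplified illustration under those assumptions (class and parameter names are hypothetical, and HNSW, which uses a graph instead of clusters, is not shown); production libraries implement this far more efficiently.

```python
import math
import random

def kmeans(vectors, k, iters=10, seed=0):
    """Plain k-means clustering; returns k centroids."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for v in vectors:
            nearest = min(range(k), key=lambda c: math.dist(v, centroids[c]))
            buckets[nearest].append(v)
        for c, members in enumerate(buckets):
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

class IVFIndex:
    """IVF-style index: each vector is stored in the list of its nearest centroid."""

    def __init__(self, vectors, n_lists=4, seed=0):
        self.vectors = vectors
        self.centroids = kmeans(vectors, n_lists, seed=seed)
        self.lists = [[] for _ in self.centroids]
        for idx, v in enumerate(vectors):
            self.lists[self._nearest_centroids(v, 1)[0]].append(idx)

    def _nearest_centroids(self, v, n):
        order = sorted(range(len(self.centroids)), key=lambda c: math.dist(v, self.centroids[c]))
        return order[:n]

    def search(self, query, k=3, n_probe=1):
        """Scan only the n_probe closest lists instead of every vector."""
        candidates = [i for c in self._nearest_centroids(query, n_probe) for i in self.lists[c]]
        candidates.sort(key=lambda i: math.dist(query, self.vectors[i]))
        return candidates[:k]

centers = [(0, 0), (10, 0), (0, 10), (10, 10)]
offsets = [(0, 0), (0.5, 0), (0, 0.5), (0.5, 0.5)]
vectors = [[cx + dx, cy + dy] for cx, cy in centers for dx, dy in offsets]
index = IVFIndex(vectors, n_lists=4)
print(index.search([10.2, 10.2], k=2, n_probe=1))
```

Raising `n_probe` scans more lists, which improves recall at the cost of speed; probing every list reduces to an exact search.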

5. Ranking and Results

Candidate results are ranked by a similarity score such as cosine similarity or dot product. Additional filters or business rules may then be applied to produce the final output.
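A minimal sketch of this final step, assuming candidates arrive with precomputed vectors and an `in_stock` flag; the candidate data and the stock rule are hypothetical stand-ins for real business logic.

```python
import math

def cosine(a, b):
    """Cosine similarity: dot product divided by the product of vector lengths."""
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))

def rank(query_vec, candidates, in_stock_only=True, top_k=3):
    """Score candidates by cosine similarity, apply a business filter, sort best first."""
    results = []
    for item in candidates:
        if in_stock_only and not item["in_stock"]:
            continue  # hypothetical business rule: hide unavailable products
        results.append((item["id"], cosine(query_vec, item["vector"])))
    results.sort(key=lambda pair: pair[1], reverse=True)
    return results[:top_k]

candidates = [
    {"id": "a", "vector": [1.0, 0.0], "in_stock": True},
    {"id": "b", "vector": [0.9, 0.1], "in_stock": False},
    {"id": "c", "vector": [0.0, 1.0], "in_stock": True},
]
print(rank([1.0, 0.0], candidates))
```

If all vectors are normalized to unit length, a plain dot product produces the same ranking as cosine similarity and is cheaper to compute.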

Vector Search vs Keyword Search

| Keyword-based search | Vector search |
|---|---|
| Matches exact words | Matches meaning |
| Sensitive to phrasing | Handles paraphrases |
| Limited context awareness | Understands intent |
| Poor with unstructured data | Designed for unstructured data |

These differences are why vector search has become the backbone of AI-driven search experiences.

Why Vector Search Powers Modern AI Search

Vector search enables capabilities that traditional search cannot support.

AI-Powered Site and Enterprise Search

Vector search improves relevance across websites, knowledge bases, and internal tools by understanding intent instead of matching keywords.

RAG (Retrieval-Augmented Generation)

RAG systems typically rely on vector search to retrieve relevant context before an LLM generates a response. Grounding the response in retrieved source material can reduce hallucinations and improve answer quality.
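The retrieval half of RAG can be sketched in a few lines: rank passages by similarity to the question, then place the best ones in the prompt. The passages, their vectors, the question vector, and the prompt wording are all hypothetical; a real system would embed the question with the same model used for the passages and send the resulting prompt to an LLM (that call is not shown).

```python
import math

def cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))

def build_rag_prompt(question, question_vec, passages, top_k=2):
    """Retrieve the top_k passages most similar to the question and insert them into a prompt."""
    ranked = sorted(passages, key=lambda p: cosine(question_vec, p["vector"]), reverse=True)
    context = "\n".join(f"- {p['text']}" for p in ranked[:top_k])
    return (
        "Answer using only the context below. If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

# Hypothetical passages with precomputed embeddings.
passages = [
    {"text": "Refunds are issued within 5 business days.", "vector": [0.9, 0.1]},
    {"text": "Refund requests require an order number.", "vector": [0.8, 0.3]},
    {"text": "Our office is closed on public holidays.", "vector": [0.1, 0.9]},
]
prompt = build_rag_prompt("How long do refunds take?", [1.0, 0.0], passages)
print(prompt)  # this string would then be sent to an LLM (call not shown)
```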

Recommendation Engines

By representing products, content, and users as vectors, recommendation engines can make suggestions that are more personalized and better suited to the context.
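One simple way to combine user and item vectors, sketched below under stated assumptions: build a user vector by averaging the embeddings of items the user liked, then recommend the unseen items closest to it. The item names and vectors are hypothetical, and production recommenders usually learn user vectors rather than averaging.

```python
import math

# Hypothetical item embeddings, hand-picked for illustration.
ITEM_VECTORS = {
    "trail running shoes": [0.9, 0.1, 0.0],
    "hydration vest": [0.8, 0.2, 0.1],
    "road running shoes": [0.85, 0.05, 0.1],
    "espresso machine": [0.0, 0.1, 0.95],
    "coffee grinder": [0.05, 0.0, 0.9],
}

def cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))

def recommend(liked, k=2):
    """Average the liked items into a user vector; return the k closest unseen items."""
    user = [sum(col) / len(liked) for col in zip(*(ITEM_VECTORS[i] for i in liked))]
    unseen = [i for i in ITEM_VECTORS if i not in liked]
    return sorted(unseen, key=lambda i: cosine(user, ITEM_VECTORS[i]), reverse=True)[:k]

print(recommend(["trail running shoes"]))
print(recommend(["espresso machine"], k=1))
```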

Multimodal Search

Multimodal embedding models can map text, images, and audio into a shared semantic space, enabling multimodal search experiences such as finding images with a text query.


The Role of Vector Databases

Conventional databases were not designed for high-dimensional similarity search. Vector databases are specifically optimized for storing embeddings and running fast ANN queries at massive scale.

Popular options include FAISS (a similarity-search library rather than a full database), Milvus, Pinecone, and Weaviate. Some search engines also support hybrid search, which combines lexical (keyword) and vector retrieval.
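Hybrid search has to merge a keyword ranking with a vector ranking. Reciprocal Rank Fusion (RRF) is one widely used way to do this, shown here as a sketch; it is not necessarily what any particular engine uses. The two result lists are hypothetical, and k = 60 is a commonly used default for the smoothing constant.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of document ids; each list adds 1 / (k + rank) to a document's score."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

keyword_results = ["d1", "d2", "d3"]  # hypothetical lexical ranking
vector_results = ["d3", "d1", "d4"]   # hypothetical ANN ranking
print(reciprocal_rank_fusion([keyword_results, vector_results]))
# ['d1', 'd3', 'd2', 'd4']
```

Documents that rank well in both lists (d1, d3) rise to the top, and RRF only needs ranks, so the two systems' raw scores never have to be put on the same scale.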

The choice of indexing strategy and database architecture has a major impact on performance, scalability, and cost efficiency.

Performance Considerations at Scale

Several factors determine vector search performance:

- Latency requirements: In most applications, results are expected within milliseconds.

- Dimensionality of embeddings: Higher-dimensional embeddings can capture more detail, but they increase storage, memory, and compute costs.[24]

- Model selection: Domain-specific models often outperform general-purpose ones.

- Infrastructure design: Sharding, compression, and distributed indexing are essential at scale.
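The cost of dimensionality and the payoff of compression are easy to estimate. The sketch below computes raw vector storage (index structures such as HNSW graphs add overhead on top) and shows scalar quantization from float32 to int8, one common compression technique. The 10 million vectors and 768 dimensions are hypothetical figures, and the quantizer assumes values in [-1, 1], which holds for every component of an L2-normalized vector.

```python
def index_memory_gb(num_vectors, dims, bytes_per_value=4):
    """Raw storage for the vectors alone (no index overhead), in decimal GB."""
    return num_vectors * dims * bytes_per_value / 1e9

def quantize_int8(vector, lo=-1.0, hi=1.0):
    """Scalar quantization: map floats in [lo, hi] to integers in [-128, 127]."""
    scale = 255 / (hi - lo)
    return [max(-128, min(127, round((x - lo) * scale) - 128)) for x in vector]

def dequantize_int8(codes, lo=-1.0, hi=1.0):
    """Approximate inverse of quantize_int8 (error at most half a step)."""
    step = (hi - lo) / 255
    return [(c + 128) * step + lo for c in codes]

print(f"{index_memory_gb(10_000_000, 768):.2f} GB")     # float32: 30.72 GB
print(f"{index_memory_gb(10_000_000, 768, 1):.2f} GB")  # int8: 7.68 GB
print(quantize_int8([-1.0, -0.5, 0.3, 1.0]))
```

Quantizing to int8 cuts raw storage by 4x at the cost of a small per-component rounding error, which is one concrete form of the accuracy-versus-resources trade-off.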

Balancing accuracy against speed and resource usage is the key to a successful production deployment.

Why Vector Search Matters for the Future

As user expectations move toward conversational, intent-driven interactions, vector search is no longer optional; it’s foundational.

Interest in vector search keeps growing, and more developers are adopting it as a preferred approach for implementing AI search, RAG systems, and personalization engines. Vector search is shaping the future of information retrieval: it provides the connecting layer between raw data and concept-level results.

For businesses building AI-powered search experiences, vector search is the difference between showing results and delivering understanding.


FAQs

What is vector search?

Vector search is a search technique that retrieves results based on semantic similarity rather than exact keyword matches.

Why is keyword search no longer sufficient?

Keyword-based search depends on exact matches and cannot understand intent, context, or semantic relationships, especially in unstructured data.

How does vector search improve AI-powered search?

Vector search enables systems to understand meaning and intent, improving relevance for AI search, RAG systems, and recommendations.

What role do vector databases play in vector search?

Vector databases are optimized for storing embeddings and performing fast Approximate Nearest Neighbor searches at scale.

Why is vector search important for the future?

As search becomes more conversational and intent-driven, vector search provides the foundational layer that connects raw data with meaningful results.